With billions of users worldwide, social media platforms face immense challenges in ensuring that content aligns with community standards, legal requirements, and ethical considerations. Content moderation includes the processes used to review, filter, and potentially restrict or remove harmful or inappropriate material to foster safe and respectful online environments.

Given these platforms’ reach and influence, moderation decisions have significant social, ethical, and legal implications. This blog provides a guide to understanding the content moderation landscape. It covers the underlying motivations for content policies, the processes involved, and the complex balance between fostering free expression and maintaining safety standards. By exploring the “why” and “how” of content moderation, it aims to shed light on the practices and challenges that define this essential area of digital governance.

What is generally banned on social media?

Social media platforms typically ban content that violates their community guidelines, legal standards, or both. Here are some common categories of banned content:

1. Hate Speech and Harassment: Content that promotes discrimination, hate, or violence against individuals or groups based on attributes like race, ethnicity, religion, gender, or sexual orientation.

2. Violence and Threats: Posts that incite, threaten, or glorify violence, including threats of harm against individuals, communities, or groups.

3. Adult Content and Sexual Exploitation: While some platforms allow limited forms of adult content, most prohibit explicit sexual material. All platforms prohibit child sexual exploitation and non-consensual intimate imagery.

4. Misinformation and Disinformation: False information that can cause harm, such as health-related misinformation or disinformation campaigns during elections, may be restricted or flagged.

5. Terrorism and Violent Extremism: Content promoting terrorist organisations, violent extremism, or content that glorifies terrorism or seeks to recruit individuals for violent causes.

6. Self-Harm and Suicide Content: Material that encourages or glorifies self-harm, suicide, or eating disorders is often removed or flagged, usually alongside links to support resources.

7. Illegal Activities: Posts involving illegal activities, such as drug trafficking, human trafficking, or the sale of prohibited substances and weapons.

8. Harmful or Deceptive Content: This includes scams, fraud, phishing, or any content intended to deceive or exploit users, such as fake giveaways or impersonation.

Platforms also commonly have policies on intellectual property violations. Copyrighted or trademarked content, such as pirated movies or music, or unauthorised reproductions of artistic works, is typically banned to prevent infringement. Many platforms also have policies on spam and misleading content, such as spammy posts, misleading links, or clickbait that disrupts the user experience or lures users to potentially malicious sites.

Each platform has its own policies and enforcement practices, which may evolve in response to regulations, societal concerns, and emerging online behaviour.

Platforms often remove content to protect users from harmful material such as the categories listed above. Removing content can also help platforms avoid legal liability for hosting unlawful material, such as copyright-infringing content or material that promotes violence. Through moderation, platforms try to balance the need for free expression with their responsibility to protect users and maintain a positive space for engagement.

The descriptions of Facebook, TikTok and YouTube’s rules – and how they are applied – contained in this blog have been drafted by the Appeals Centre Europe based on the platforms’ policies published in November 2024.

Please note that this article has been drafted for informational purposes only and does not constitute legal or professional advice. For current versions of the platforms’ policies, please follow the links listed under Sources at the end of this article.

Why we exist

The Appeals Centre Europe was established to help users navigate and dispute content decisions by social media platforms, giving people more transparency, fairness, and control over the platforms they love and use daily.

If you disagree with a content decision by Facebook, TikTok, or YouTube, you can submit an appeal through our portal.

Content Removals on Facebook: What It Means and How to Handle It

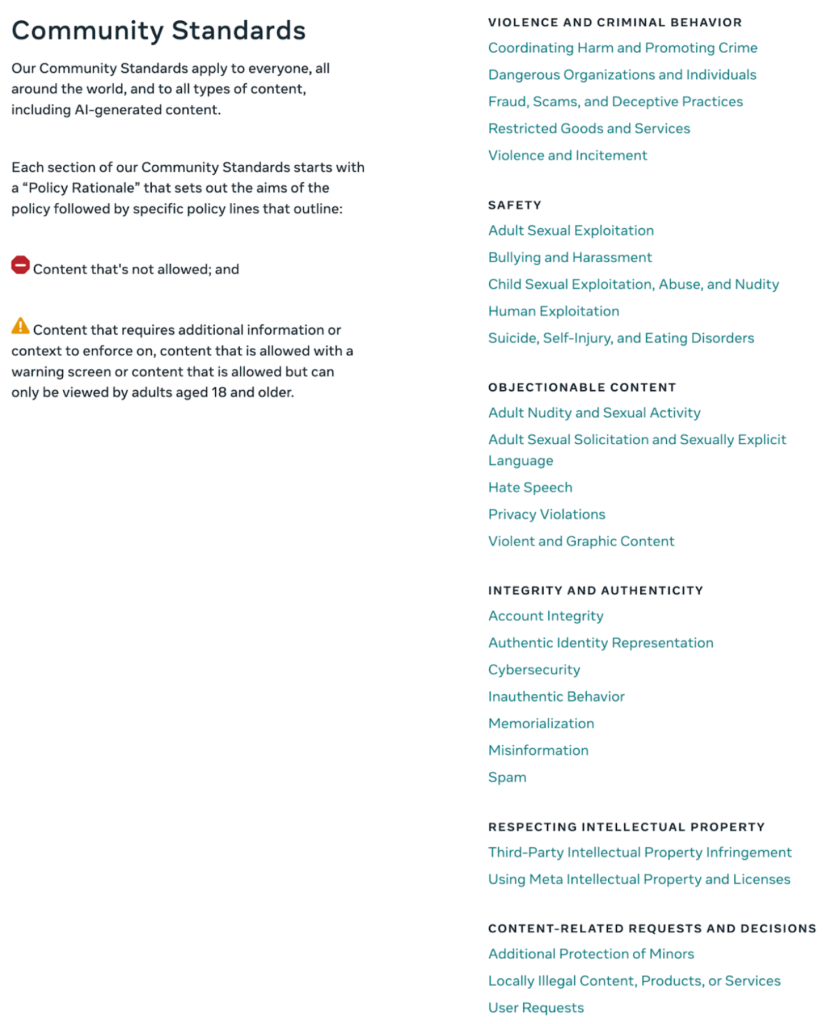

Since November 12th, 2024, Meta has unified the Community Standards used for evaluating content on its platforms, including Facebook and Instagram.

Meta’s content removal process is complex, and the rules can sometimes seem unclear. Below are some of the main reasons content gets removed.

Why Facebook Removes Content

Facebook removes or limits content that it considers to break its rules on:

• Violence and criminal behaviour

• Safety

• Objectionable content

• Integrity and authenticity

• Respecting intellectual property

• Content-related requests and decisions involving minors, local regulations, and user requests

You can view the full list of policies in Meta’s Community Standards (listed under Sources).

How Does Facebook Flag Content?

Facebook uses a blend of AI (artificial intelligence) and trained human moderators to scan and review posts. Here is a quick look at how it works:

• AI Detection: Automated systems are the first line of defence. They scan content for potential violations, flagging posts that contain concerning language or images.

• Human Moderation: If the AI isn’t sure, human moderators step in to examine the situation more closely and make a final decision.

How to Appeal a Content Removal on Facebook

Appealing is easier than you might think! Here’s what to do if you feel Facebook made the wrong call:

1. Check Your Notification: You’ll get a notification when content is removed. This will usually include an option to appeal.

2. Appeal Facebook’s decision: Submit an appeal through Facebook’s internal complaints mechanism. Facebook’s Support Inbox keeps track of your appeals and provides status updates.

3. If – after these steps – you still get a negative response about the content moderation decision, you can appeal to the Appeals Centre Europe. You will need your Facebook reference number and some additional information that you can submit on our website once you start a dispute.

How to Appeal a Content Removal Decision by Facebook

If you are in the European Union, you can submit an appeal via our portal. While we accept disputes about content in any language spoken in the EU, you’ll need to submit your dispute in English, German, French, Dutch, Italian or Spanish. If you want to learn more about the Appeals Centre Europe, visit our home page.

If you can’t find your reference number, please check this guide.

Start an Appeal – How to Find Your Facebook Reference Number

If your post, image, video, or comment has been removed from Facebook, or if you’ve come across content you believe should be taken down, you’ll need to provide us with a Facebook reference number to start the appeals process.

This number allows us to request details from Facebook about their decision. Without it, we will not be able to review your appeal.

To help you navigate this process, we have created this step-by-step guide.

What Is a Facebook Reference Number, and How Do You Find It?

When Facebook removes your content, or if you report someone else’s content, they will send you a notification outlining their decision.

If you are in the EU, this should include information about dispute settlement bodies and your Facebook reference number.

You can typically find this message in one of the following places:

• Profile Status: Log into Facebook and check notifications in your account.

• Business Support Home (for business accounts): Log in to your Business Suite to locate the relevant messages.

What if I Can’t Find My Facebook Reference Number?

No need to worry: there’s another way to retrieve it. You can request a Facebook reference number directly from Meta by following these steps:

1. Open Meta’s reference number request form.

2. Log in to your Facebook account.

3. Fill out the required details on the form.

Once you’ve completed the form, Meta will provide the reference number you need.

How Do You Submit Your Facebook Reference Number?

Once you’ve got your reference number, go to our Case Portal to start your appeal. In Step 2: Platform Information, select “Facebook” from the dropdown menu. A field will appear where you can enter your Facebook reference number.

This step is crucial to ensuring that we can move forward with your appeal.

Where Can You Find More Help?

If you need further assistance at any point in the process:

• Visit Meta’s detailed guide on Facebook reference numbers.

• Follow our Facebook page for more tips, guides, and updates on digital rights and content moderation.

Taking the first step in an appeal can feel overwhelming, but with the right tools and support, you’ll be on your way to resolving your case.

Why TikTok Removes Videos (and How You Can Appeal)

TikTok’s moderation process can sometimes lead to unexpected video removals. If your video was taken down and you’re not sure why, you’re not alone! Let’s explore why TikTok removes videos, how to appeal, and what to keep in mind to keep your content live.

Why TikTok Removes Videos

TikTok follows a detailed set of Community Guidelines, with key reasons for removal including:

• Hate Speech & Harassment: Content targeting specific groups or individuals may be removed.

• Violent or Graphic Content: TikTok does not allow graphic or explicit material, even if it is intended as news.

• Misinformation: TikTok removes misleading content that could cause significant harm.

How TikTok Moderates Content

Here is the lowdown on how TikTok reviews posts:

• AI Detection: Automated systems flag content based on certain keywords and patterns.

• Human Moderators: If a flagged video is not clear-cut, moderators step in for a final review.

How to Appeal a TikTok Video Removal

If you think your video shouldn’t have been removed, you can appeal it in the app:

1. Check Your Notifications: TikTok will notify you with the reason your video was removed.

2. Submit an Appeal: Within the notification, you will have an option to appeal and share more context.

3. Track the Appeal: You can check on the status of your appeal within the app.

Preventing Future Removals on TikTok

To reduce the risk of removal, take particular care with language and visuals around sensitive topics, as these are more likely to be flagged by automated systems. By keeping these tips in mind, you can keep your content active and enjoy a smoother experience on TikTok.

Further reading

We invite you to read TikTok’s Community Guidelines (listed under Sources), which explain how TikTok moderates content on its platform.

YouTube Content Removal: Why It Happens and What You Can Do About It

If you are a YouTube creator, having a video removed can be a significant setback – especially if you’re not sure why it happened.

Why YouTube Deletes Videos

YouTube has Community Guidelines designed to keep the platform safe and engaging. Here are a few key reasons videos might get removed:

• Harmful Content: Videos that promote dangerous activities or harm others are typically flagged.

• Graphic or Violent Content: Explicit violence or graphic imagery is not allowed on YouTube.

• Spam and Misinformation: Spam, and content spreading misleading information (especially on topics like health), may be removed.

How Does YouTube Decide What Stays and What Goes?

YouTube relies on two main tools:

• Automated Detection: AI scans content for red flags, like dangerous stunts or prohibited imagery.

• User Reports: Viewers can report (flag) videos, and flagged videos may then be reviewed by a moderator.

How to Appeal if YouTube Deletes Your Video

If your video is removed, and you disagree, you can appeal the decision. Here’s a quick guide:

1. Check your email: YouTube will usually send an email explaining why your video was removed.

2. Submit an Appeal: Head to YouTube Studio, find your video, and explain why you think it doesn’t violate any rules.

3. Contact Support (if needed): For complex cases, you may want to reach out directly to YouTube’s support team.

Tips for Keeping Your Videos Safe

To avoid having your content removed, you should check YouTube’s guidelines on a regular basis – especially if you cover sensitive topics. This will help keep your content online and avoid unexpected interruptions.

For more detail, see YouTube’s Community Guidelines (listed under Sources).

When Does Content Moderation Become Censorship?

As social media evolves, so does the conversation around censorship. Platforms like Facebook, TikTok, and YouTube say they moderate content to provide a safe environment and keep the user experience positive, but some users feel that moderation limits their voice.

Content moderation on social media can sometimes feel like unjustified or unexplained censorship or banning. Let’s break down how social media content moderation works, share a few real-life examples, and talk about what to do if you are affected.

Why Content Moderation Happens on Social Media

If you believe you have been wrongly censored or banned on social media, here are a few common reasons content might be flagged or removed:

• User Reports: When users report a post, it may be sent for review.

• Community Standards: Each platform has its own set of rules that define what is acceptable.

• Legal Compliance: Platforms must follow national laws, so content that might be fine in one place could be restricted in another.

Real-Life Examples of Social Media Censorship

Here are some examples where users felt that moderation went too far and tipped into censorship:

1. Political Content: Posts about protests or political movements have been removed due to perceived risks or conflicts with policies.

2. Neutral Reporting: In some instances, journalists’ reports on social media have been removed just for mentioning terrorist organisations.

3. Artistic Nudity: Art, especially if it includes nudity, can be flagged on platforms with policies around adult content.

What to Do if You Are Affected

If you find your content being removed and think you have been censored, here are some quick tips:

1. Appeal: Most platforms allow you to appeal. Take time to explain why you think the post did not violate their guidelines.

2. Review Community Guidelines: Familiarising yourself with the platform’s rules can help you understand what happened and avoid future issues.

3. Consider Alternatives: For users who want more control over their content, other platforms may provide different moderation policies.

Conclusion

While social media content moderation can feel like censorship, knowing the rules can help you navigate platforms confidently. Social media is always evolving, and being aware of the boundaries can help you share responsibly across different platforms.

Flagging content

Social media platforms offer tools that allow users to flag potentially harmful content as part of their efforts to maintain a safe and welcoming environment.

These tools empower users to report content they find offensive, harmful, or in violation of community guidelines, such as hate speech, explicit material, misinformation, or harassment.

Once flagged, content typically undergoes a review process, often involving automated systems and human moderators, to assess whether it should be removed or restricted. Flagging tools not only help platforms respond more quickly to “problematic content”, but also foster a sense of community accountability, as users play an active role in shaping a safer online space.

Sources

https://transparency.meta.com/policies/community-standards/

https://www.youtube.com/intl/ALL_ie/howyoutubeworks/policies/community-guidelines/

https://www.tiktok.com/safety/en

https://support.google.com/youtube/answer/7554338?hl=en-GB